Four factors that should underpin whether workloads should live in a public cloud.

The tech industry loves a good “pendulum swing” - rushing to adopt whatever is new and shiny, often with the promise of the new thing being bigger, better, faster or cheaper (or some combination of these). Through the first part of this century, arguably the two biggest trends in IT infrastructure have been virtualisation, followed quickly by cloud computing.

Cloud promised to be a panacea to all sorts of perennial challenges that IT faced:

- Agility in delivering the resources that the businesses needed

- Only paying for what was consumed

- Speed of deployment

- Staffing and running costs

And in many instances that, initially at least, seemed to hold true. What was fashionable was also rational.

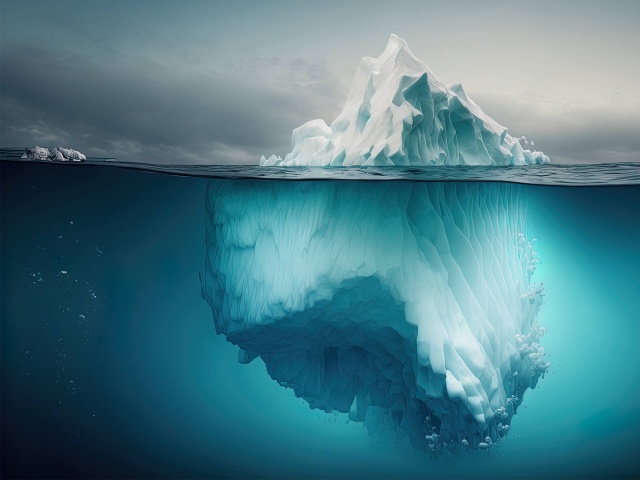

Of course, nothing is true forever, and today there doesn’t seem to be a serious industry watcher left who still regards a “100% public cloud” strategy as logical for anyone other than the smallest and/or simplest of organisations. The majority of organisations now report pursuing a hybrid cloud strategy, with a significant number (often cited at around 25% of organisations, though I’ve seen numbers as high as 40%) actively repatriating workloads from the public cloud, returning them to infrastructure they control - whether that be in their own data centre or in a co-location facility operated by a partner.

Two questions really need addressing: why, and why now?

Why keep or return a workload to your private cloud?

When you boil it down, there are really four rational reasons why you’d run a workload on your own cloud infrastructure, whether that’s in a data centre that you own or, as is becoming increasingly popular, in rented space in a co-location facility:

1. Security, privacy, compliance

A recent study by research firm Vanson Bourne, based on interviews with nearly 1,500 respondents, cited security and data sovereignty as the number one reason that data and workloads were being repatriated from the public cloud. Of course, security, privacy and regulatory compliance are not exactly the same thing (I feel a follow-up post coming on), but what they do have in common is:

- the degree of granular control that is possible over your data and/or workload

- where it resides

- who has access to it

- when and how it moves, and

- for compliance, as importantly, your ability to demonstrate all of this in a way that is acceptable to your regulators

Then, of course, there was the huge shift to remote working that the pandemic demanded. Through that time it’s likely that certain security controls and compliance requirements were overlooked, as organisations enacted emergency measures just to keep the lights on and shifted workloads to the cloud. Now, as we move to a new normal, those decisions are being thought through more carefully, and in some cases compliance and/or data security requirements mean bringing the workload back.

Data sovereignty has also become a much hotter topic over the last couple of years, spurred by numerous factors including security and privacy concerns, especially where state actors may be involved. In Europe, as an increasing number of both private and public organisations migrate data and workloads to the cloud, an overreliance on a small handful of (American) public cloud providers has also sparked concerns over both the concentration of power within the hands of just a few players, plus the requirement on such organisations to provide the US Government access to data under the auspices of the Patriot Act.

In fact, in a recent cloud study “improving security posture and meeting regulatory compliance” was cited as the top reason for data repatriation projects.

2. Performance / low latency

The second reason that many are considering moving data back on-premises is all about performance. Let’s take machine learning as an example. Many machine learning applications gather and interpret data from literally millions of sensors, then make decisions based on it. That collation, interpretation and decision-making often needs to occur in as near real-time as possible. The laws of physics simply don’t allow for a backhaul to a cloud or data centre located hundreds of miles away. Here, providing an edge infrastructure can be the only practical solution.
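To put a rough number on the physics, here is a minimal Python sketch of the best-case round trip over optical fibre. It assumes light travels through fibre at about two-thirds of its speed in a vacuum; the function name and the sample distances are mine, purely for illustration. The result is a floor only: routing hops, queuing and processing at the far end all add to it, and a decision loop usually needs several round trips.

```python
# Best-case round-trip propagation delay over optical fibre.
# Assumption: signal speed in fibre is roughly 2/3 of the speed of light in a vacuum.
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBRE_VELOCITY_FACTOR = 2 / 3  # assumed typical value, varies by fibre type
KM_PER_MILE = 1.609344


def min_round_trip_ms(distance_miles: float) -> float:
    """Return the theoretical minimum round-trip time in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * FIBRE_VELOCITY_FACTOR)
    return 2 * one_way_seconds * 1000


# Hypothetical distances: an on-site edge node versus increasingly remote regions.
for miles in (1, 100, 500, 2000):
    print(f"{miles:>5} miles: at least {min_round_trip_ms(miles):.2f} ms round trip")
```

No amount of spend brings the figure for the remote cases down; the only lever is shortening the distance, which is exactly what edge infrastructure does.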

3. Legacy application support

Sometimes, in that fashionable rush to the cloud, applications and data that had no business being there got caught up in the lift and shift. Take, for example, a scenario where all the data storage required for an application got moved to the cloud, but the application or applications that need access to that data remained on-premises. While it was cheap on day one to move the data (as data ingress tends to be very inexpensive), the egress fees to get the data back out can quickly add up. Have a team of 50 people all accessing and changing the same data set multiple times a day, and very quickly any initial cost savings are swamped.
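The arithmetic behind that egress example can be sketched in a few lines of Python. Only the team of 50 comes from the scenario above; the pulls per day, the data set size, the working days and the price per GB are all hypothetical figures chosen for illustration, not any provider's quoted rate.

```python
def monthly_egress_cost(
    users: int,
    pulls_per_user_per_day: int,
    dataset_gb: float,
    price_per_gb: float,
    working_days: int = 22,
) -> float:
    """Estimate the monthly egress bill when on-premises users pull cloud-hosted data."""
    gb_out = users * pulls_per_user_per_day * dataset_gb * working_days
    return gb_out * price_per_gb


# Hypothetical inputs: 50 users, 4 pulls a day, a 5 GB data set, $0.09 per GB out.
cost = monthly_egress_cost(
    users=50,
    pulls_per_user_per_day=4,
    dataset_gb=5,
    price_per_gb=0.09,
)
print(f"Estimated monthly egress cost: ${cost:,.2f}")
```

With those assumed inputs the team moves 22,000 GB out of the cloud each month, at a cost of roughly $1,980, for data that cost next to nothing to put there. Swap in your own figures and the conclusion may be better or worse, but the shape of the calculation stays the same.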

And it’s not just the data. Some applications are just not built to be able to take advantage of the value that a move to the cloud can bring. If all that occurred in the initial shift to the cloud was the virtualisation of the physical server on which the application resided, then any expectations of performance elasticity and application portability between zones or regions may be a disappointment.

4. Cost control

The fourth and final factor to consider is cost control. For many organisations, this was the cornerstone of the initial rush to the cloud. The idea was that by going “all in” on the public cloud, data centres could be completely shut down and the IT teams redeployed or let go. There was also the belief that the pricing and economics of hyperscale could never be matched by any one organisation for which infrastructure was not part of the core business.

Fast forward to the present day, however, and rational assessment by CIOs around the globe has found that for many, those cost savings have not materialised. Because of the three reasons already covered, only a small minority of larger organisations find themselves able to divest all infrastructure. Most will find themselves unable to close at least some of their data centres, and others will find themselves in long-term relationships with one or more co-location providers. The data centre operations teams they believed they would be able to retire have instead only been reduced in number, while additional staff have been taken on in “Cloud Centre of Excellence” (CCoE) and/or FinOps disciplines dedicated to optimising and rationalising cloud spend.

While all of this has been going on, of course, we were working at bringing HyperCloud to market. By solving, deep in the technology, many of the challenges that drove the initial rush to the public cloud, we in turn change the economics and viability of building and owning your own private cloud.

As fashionable is replaced with rational, as the pendulum stops swinging and finds equilibrium, private clouds will become ubiquitous.