When you’re maintaining an expansive host of internal and external static sites, there’s a lot of infrastructure work that needs to be done, but do you really need to do all of it again and again for every site? Our systems engineer Chris Lucas-McMillan recently completed a project to streamline a variety of general site infrastructure tasks.

At SoftIron, we have a lot of internal sites, and plans for many more. These sites are used for everything from collating internal product documentation to maintaining a single source of truth for branding elements and company processes. Add to that our public sites (such as this blog), and that’s a lot of sites!

What these sites have in common:

- their staging and production code is stored in GitLab,

- their base infra is implemented via Terraform and configured with Ansible,

- security certificates are automatically created with Let’s Encrypt and managed with Certbot, and

- the static content is served via NGINX.

While the sites themselves may use different development frameworks and diverge significantly in their features, there’s a lot of overlap in their infrastructure setup and maintenance.

This includes generating preview builds for every development branch in our staging sites, along with a nice visual Terraform pipeline integrated into GitLab’s merge request pages that enables one-click previews of staging builds and easy investigation into build errors. But setting all this up for every new site can eat up a lot of time.

So, I set out to build a static sites project - an ‘infrastructure as code’ Git repository - that could be used to fully automate our web infrastructure: create static site staging and production VMs, create and clean up site builds, and automatically secure sites with Let’s Encrypt. It would also support custom error pages and password-restricted sites. The test site for the static sites repo would be HyperWire - this blog!

In this post, I’ll share some details about how I approached the problem, alongside some key snippets of code.

Terraform configuration

As I was beginning the static sites project, our technical writer Wendy had already written the base Terraform to build the instances for the blog’s staging and production environments in HyperCloud. You can read through the Terraform configuration at Wendy’s post, How we built our blog with HyperCloud.

The Terraform that would be applied to future sites relying on the static sites infrastructure is much the same as what Wendy wrote, with some security group additions. I also added Ansible user details in terraform/templates/, e.g.:

    [all:vars]
    ansible_ssh_user=root

    [staging]
    ${stg_hostname} ansible_host=${stg_ip}

Multi-project CI pipelines

I’ve got to credit another colleague here, Ben Brown, who had recently used a multi-project pipeline in another project. I’d never seen one before, and it completely blew my mind with new possibilities. Multi-project pipelines allow you to trigger CI pipelines in other projects in your GitLab instance, and pass variables and data between the two. I’d been desperate to find a reason to play with them, and they were the perfect fit for this project!

By using multi-project pipelines, we can keep the CI specific to each site in its own repository, and then send the build output over to the infrastructure repository along with some configuration to tell it how the site should be deployed.
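As a rough illustration of that hand-off, a site repository might build its content and then trigger the infrastructure project along these lines. This is a minimal sketch rather than our exact configuration: the `build_site` job, the `./build.sh` script, and the `infra/static-sites` project path are placeholder names, while the `UPSTREAM_*` variables mirror the ones the infrastructure pipeline reads in the staging deploy job.

```yaml
# .gitlab-ci.yml in a site repository (illustrative only)
stages:
  - build
  - deploy

build_site:
  stage: build
  script:
    - ./build.sh                  # placeholder: whatever generates the static site
  artifacts:
    paths:
      - public/                   # build output for the infrastructure pipeline to fetch

trigger_infra:
  stage: deploy
  variables:                      # job-level variables are passed to the downstream pipeline
    UPSTREAM_PROJECT_PATH: $CI_PROJECT_PATH
    UPSTREAM_PROJECT_PATH_SLUG: $CI_PROJECT_PATH_SLUG
    UPSTREAM_BUILD_JOB: build_site
    UPSTREAM_REF_NAME: $CI_COMMIT_REF_NAME
    UPSTREAM_REF_SLUG: $CI_COMMIT_REF_SLUG
  trigger:
    project: infra/static-sites   # placeholder path to the infrastructure repository
    branch: main
    strategy: depend              # report the downstream pipeline's status back upstream
```

Passing the project path, job name, and ref downstream is what would let the infrastructure pipeline pull the right build artifacts with a cross-project `needs` entry.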

Build pipeline

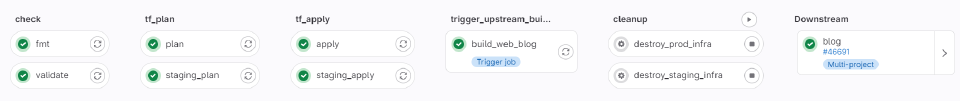

We build a visual view of the build pipeline for GitLab MRs that looks like this:

Each stage matching the Terraform pipeline stages defined in gitlab-ci.yml e.g.

    stages:
      - check
      - tf_plan
      - tf_apply
      - configure
      - deploy
      - cleanup

Each stage is defined further down in the GitLab config YAML, with calls to the relevant Ansible playbooks. For example, here is the deploy stage for production, which is triggered when a website pipeline has built a site:

    deploy:
      image: python:latest
      stage: deploy
      extends:
        - .prod
        - .ansible
      script:
        - ansible-playbook configure.yaml
        - ansible-playbook prod.yaml
      environment:
        name: production
        url: $PROD_URL
        on_stop: destroy

Another important part of getting everything working smoothly was ensuring that each staging branch build gets cleaned up. This is handled by my Ansible clean-up playbook, which is triggered when a staging branch MR has been accepted and the new build has been deployed to production:

    .staging_cleanup:
      image: python:latest
      stage: cleanup
      when: manual
      extends:
        - .staging
        - .ansible
      script:
        - ansible-playbook cleanup.yaml

    staging_cleanup:
      extends:
        - .staging_cleanup
      variables:
        SUBDOMAIN: $CI_COMMIT_REF_SLUG
      environment:
        name: staging/$CI_COMMIT_REF_SLUG
        action: stop
      rules:
        - if: $CI_PIPELINE_SOURCE == "pipeline"
          when: never
        - !reference [.staging_rules, rules]
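I haven’t reproduced cleanup.yaml here, but a playbook of this kind can be quite small. The sketch below is an assumption about its general shape, not the real playbook: the `web_root` directory and the NGINX config location are hypothetical, and reading `SUBDOMAIN` from the environment simply follows the variable set in the CI job.

```yaml
# cleanup.yaml (hypothetical sketch, not the actual playbook)
- hosts: staging
  vars:
    subdomain: "{{ lookup('env', 'SUBDOMAIN') }}"   # set by the CI job
    web_root: /var/www/staging                      # assumed location of preview builds
  tasks:
    - name: Refuse to run without a subdomain
      assert:
        that:
          - subdomain | length > 0
        fail_msg: "SUBDOMAIN is empty, refusing to delete anything"

    - name: Remove the preview build for this branch
      file:
        path: "{{ web_root }}/{{ subdomain }}"
        state: absent

    - name: Remove the branch's NGINX server block
      file:
        path: "/etc/nginx/conf.d/{{ subdomain }}.conf"
        state: absent
      notify: Reload NGINX

  handlers:
    - name: Reload NGINX
      service:
        name: nginx
        state: reloaded
```

The guard task matters more than it looks: with an empty `subdomain`, the first `file` task would otherwise point at the whole web root.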

Naming staging builds based on their branches

When working in the staging environments for our various sites, we want to be able to preview the build for each site before merging to the main branch, which will then be pushed to production. Part of this involves creating subdomains for each branch that we can browse to in a site’s staging environment.

This is handled using GitLab’s CI/CD variables in my Ansible configuration. Below you can see the part that involves generating the subdomain for each branch’s preview build - each subdomain is based on the branch name, with any illegal characters becoming dashes.

    .staging_deploy:
      image: python:latest
      stage: deploy
      extends:
        - .staging
        - .ansible
      script:
        - ansible-playbook configure.yaml
        - ansible-playbook staging.yaml

    staging_deploy:
      extends:
        - .staging_deploy
      variables:
        SUBDOMAIN: $UPSTREAM_REF_SLUG
        STG_DOMAIN: stg-infra.softironlabs.net
      needs:
        - job: staging_create_ansible_inventory
          artifacts: true
        - project: $UPSTREAM_PROJECT_PATH
          job: $UPSTREAM_BUILD_JOB
          ref: $UPSTREAM_REF_NAME
          artifacts: true
      rules:
        - !reference [.upstream_staging_deploy_rules, rules]
      environment:
        name: $UPSTREAM_PROJECT_PATH/staging/$SUBDOMAIN
        url: "https://$SUBDOMAIN.$UPSTREAM_PROJECT_PATH_SLUG.$STG_DOMAIN"
        on_stop: staging_cleanup
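For reference, GitLab derives `CI_COMMIT_REF_SLUG` by lowercasing the ref name, replacing everything except `0-9` and `a-z` with `-`, trimming leading and trailing dashes, and shortening the result to 63 bytes. A throwaway job like the following can be handy for checking what a given branch name will turn into. The job name `show_preview_slug` is made up for illustration, and placing it in the `check` stage is just a convenient assumption.

```yaml
show_preview_slug:
  stage: check
  script:
    # e.g. a branch named "feature/New_Post" yields the slug "feature-new-post"
    - echo "Branch ${CI_COMMIT_REF_NAME} becomes ${CI_COMMIT_REF_SLUG}"
```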

Generating security certificates for staging build subdomains

Having a separate preview build for each branch is great, but it does require some extra work to avoid a slew of security certificate warnings in a user’s browser. The staging infrastructure is only accessible internally, so Let’s Encrypt can’t reach it to perform file-based (HTTP-01) validation, and we need to use DNS validation (DNS-01) instead. We handle this with Certbot, which we install first:

    - name: Install Certbot
      apt:
        name:
          - certbot
          - python3-certbot-nginx
        state: latest

Next, we make sure the /etc/letsencrypt directory exists (it is created if missing) and check whether an SSL certificate for the staging domain has already been issued:

    - name: etc/letsencrypt exists
      file:
        path: /etc/letsencrypt
        state: directory

    - name: Check if a Staging SSL Exists
      stat:
        path: /etc/letsencrypt/live/{{ staging_domain }}/fullchain.pem
      register: staging_ssl_stat

If the staging SSL certificate doesn’t exist, we use Certbot in RFC2136 mode to request a certificate for our staging domain, and validate it with a temporary DNS record:

    - name: Issue a Staging SSL
      command: certbot certonly --dns-rfc2136 --dns-rfc2136-credentials /etc/letsencrypt/dns_rfc2136_credentials -d *.{{ staging_domain }} -d {{ staging_domain }} -vv --dns-rfc2136-propagation-seconds 30 -m {{ letsencrypt_notification_email }} --noninteractive --expand --agree-tos
      when: not staging_ssl_stat.stat.exists
      register: stg_ssl_issue_output
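The credentials file passed to `--dns-rfc2136-credentials` has to exist before Certbot runs. One way to manage it from the same playbook is sketched below. The `rfc2136_*` variable names are placeholders for illustration, the port and algorithm values are examples that would need to match your DNS server and TSIG key, and the keys inside the file follow the format documented for Certbot’s dns-rfc2136 plugin. On Debian-based systems that plugin is typically packaged separately as `python3-certbot-dns-rfc2136`.

```yaml
- name: Write RFC2136 credentials for Certbot
  copy:
    dest: /etc/letsencrypt/dns_rfc2136_credentials
    owner: root
    group: root
    mode: "0600"          # Certbot warns if this file is readable by other users
    content: |
      dns_rfc2136_server = {{ rfc2136_server }}
      dns_rfc2136_port = 53
      dns_rfc2136_name = {{ rfc2136_key_name }}
      dns_rfc2136_secret = {{ rfc2136_key_secret }}
      dns_rfc2136_algorithm = HMAC-SHA512
  no_log: true            # keep the TSIG secret out of the job log
```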

The Ansible playbooks use a lot of variables that were defined in the CI pipeline. To make them available to Ansible, they are sourced from the environment in ansible/group_vars/all.yaml, for example:

    domain: "{{ lookup('env', 'PROD_DOMAIN') }}"
    commit_ref_slug: "{{ lookup('env', 'CI_COMMIT_REF_SLUG') }}"
    latest_version: "{{ lookup('env', 'CI_COMMIT_SHORT_SHA') }}"
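One thing to keep in mind is that `lookup('env', ...)` returns an empty string when the variable is not set, so a missing CI variable can go unnoticed until a later task misbehaves. A small guard task along these lines can make that failure obvious early on. It is an illustrative sketch, not a task taken from the repository.

```yaml
- name: Check that required CI variables reached Ansible
  assert:
    that:
      - domain | length > 0
      - commit_ref_slug | length > 0
      - latest_version | length > 0
    fail_msg: "A required CI/CD variable is missing from the job environment"
```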

Our production YAML is actually quite similar, except that, as production is an internet-facing site, we can perform validation with Certbot’s standalone HTTP challenge instead of having to go through additional steps with RFC2136:

    - name: ACME Challenge
      command: certbot certonly --standalone -d {{ domain }} -d {{ domain_alt }} -m challenge-ownership-address@softiron.com --noninteractive --expand --agree-tos
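Note that `--standalone` makes Certbot start its own temporary web server, so port 80 needs to be free while the challenge runs. To avoid requesting a certificate on every pipeline run, the task can also be guarded in the same spirit as the staging check. A possible variant, offered as a sketch rather than the playbook’s actual task, uses the `creates` argument of the `command` module and reuses the `letsencrypt_notification_email` variable from the staging task:

```yaml
- name: ACME Challenge (skipped once a certificate exists)
  command:
    cmd: >-
      certbot certonly --standalone
      -d {{ domain }} -d {{ domain_alt }}
      -m {{ letsencrypt_notification_email }}
      --noninteractive --expand --agree-tos
    # Ansible skips the command when this file is already present
    creates: "/etc/letsencrypt/live/{{ domain }}/fullchain.pem"
```

This assumes Certbot’s default behaviour of naming the certificate directory after the first `-d` domain.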

This was phase one

All of the above was enough to get started with the infrastructure needed for setting up our blog site. Once it was all up and running, I added a few extra features and functions, such as triggering an upstream build from downstream, managing how staging builds handle future-dated posts, and more, all of which I’ll detail in my next post. Watch this space!