The future of infrastructure, or just another marketing term? Here’s my take on what ‘cloud native hardware’ actually entails - and how that impacts on scale, resourcing and application deployment.

What is ‘cloud native’?

As cloud became the dominant application deployment method in the 2010s, we saw a change in the way that teams used infrastructure.

From here on, the infrastructure had an API, which is a bigger deal than you might think. Consider where we were coming from: thirty years of data center growth had left a swathe of GUIs, desktop Java apps, proprietary CLI tools, SOAP APIs and XML-RPC interfaces. All the detritus from several decades of incremental, organic growth, plus quasi-abandoned products that were still vital to infrastructure teams.

Cloud brought a single, well-supported API that could provision infrastructure.

That includes VMs, but also storage, networking and many other technologies. What is more, that API was provided wholesale by someone else, instead of being built like a house of cards on legacy hardware never intended for automation.

Native use of these APIs as part of the application developer’s toolkit was a natural progression. Cloud became more prevalent and developers became accustomed to treating compute, storage and network as the programming functions they used in the application stack itself. Birthed from this extension of programming into the infrastructure realm, cloud native development embraced the ubiquity of cloud APIs to extend the scale, resilience, intelligence and reliability of modern applications. Developers could now directly observe the state of servers instead of delegating that duty to a ticket queue in a server team help desk, which meant developers were able to automatically repair faults and rebuild the environment as needed. It is applications with these qualities of infrastructure automation as a core functionality which typically bear the term “cloud native”.
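To make the observe-and-repair idea concrete, here is a minimal sketch of a reconciliation loop: compare the desired state with what the API reports, then issue calls to converge. It is an illustration only. `FakeCloudAPI`, its methods and the server names are hypothetical stand-ins for a real provider API, not any vendor's actual interface.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCloudAPI:
    """Hypothetical stand-in for a provider API (not a real SDK)."""
    servers: dict[str, str] = field(default_factory=dict)  # name -> "healthy" | "failed"

    def list_servers(self) -> dict[str, str]:
        return dict(self.servers)  # return a snapshot, like an API response

    def create_server(self, name: str) -> None:
        self.servers[name] = "healthy"

    def delete_server(self, name: str) -> None:
        self.servers.pop(name, None)


def reconcile(api: FakeCloudAPI, desired: set[str]) -> list[str]:
    """Converge observed state towards desired state; return the actions taken."""
    actions = []
    observed = api.list_servers()
    for name, status in observed.items():
        if name not in desired:
            api.delete_server(name)
            actions.append(f"delete {name}")
        elif status == "failed":
            # Repair by rebuilding, instead of raising a ticket for a human.
            api.delete_server(name)
            api.create_server(name)
            actions.append(f"rebuild {name}")
    for name in sorted(desired - set(observed)):
        api.create_server(name)
        actions.append(f"create {name}")
    return actions


api = FakeCloudAPI({"web-1": "healthy", "web-2": "failed", "old-1": "healthy"})
print(reconcile(api, {"web-1", "web-2", "web-3"}))
# ['rebuild web-2', 'delete old-1', 'create web-3']
```

Running the loop a second time returns an empty list, because nothing differs any more; that idempotence is what lets such loops run continuously without a human in the loop.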

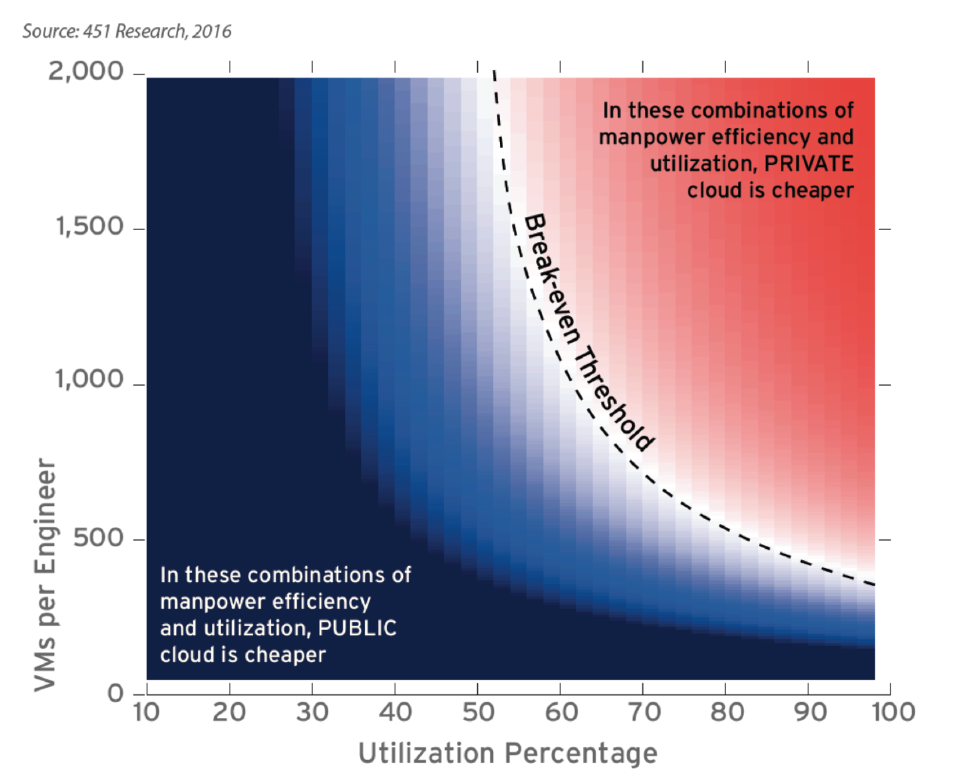

Private cloud can be cheaper than public cloud, but to make that happen customers must be able to manage a large number of instances per engineer. Instances per engineer and cloud utilisation are the key metrics here, a fact which has been known for some time. A private cloud consumer who wants to control their own destiny needs to deploy a system which allows engineers to easily manage large numbers of assets.

If each engineer can manage in excess of 1500 VMs then you have a good chance of making private cloud cheaper than public cloud.
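The reasoning behind a VMs-per-engineer threshold is simple arithmetic: staff cost is spread across every VM an engineer looks after, and whatever margin remains after hardware costs has to cover it. The sketch below computes a break-even point under that simplified model. The function name, the model and every input figure are hypothetical placeholders, not 451 Research data.

```python
import math


def break_even_vms_per_engineer(engineer_cost_per_year: float,
                                public_price_per_vm_month: float,
                                hardware_cost_per_vm_month: float,
                                utilisation: float) -> int:
    """Smallest number of VMs per engineer at which a private VM costs no more
    than a comparable public cloud VM (simplified, hypothetical model).

    Hardware cost is divided by utilisation because idle capacity is still paid
    for; the remaining margin per VM must cover the engineer's salary.
    """
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    hardware_per_used_vm = hardware_cost_per_vm_month / utilisation
    margin = public_price_per_vm_month - hardware_per_used_vm
    if margin <= 0:
        raise ValueError("hardware alone already costs more than public cloud")
    labour_per_month = engineer_cost_per_year / 12
    return math.ceil(labour_per_month / margin)


# Made-up inputs, for illustration only.
print(break_even_vms_per_engineer(
    engineer_cost_per_year=120_000,
    public_price_per_vm_month=40,
    hardware_cost_per_vm_month=15,
    utilisation=0.75,
))  # 500
```

The result is very sensitive to every input, and utilisation appears in the denominator, which is why both utilisation and instances per engineer matter. Plug in your own figures rather than relying on these.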

451 Research subscribers can access live, up-to-date pricing data through the Cloud Price Index™, which can be used to benchmark costs for a selected quantity of resources, with optional price comparisons across multiple public cloud vendors.

In summary, cloud native:

- requires fully embracing cloud as a platform to leverage improved scaling and lower operational cost per unit of service,

- allows companies to increase the number of virtual machines (or other services) supported by a single engineer, and

- is key to making private cloud cheaper than public cloud.

By embracing cloud native - and of course ensuring that the private cloud is being fully utilised - customers can obtain better and more cost-effective results than public cloud.

Hardware automation: it’s not just about applications

In a former role, I tackled the problems of automating rack-scale solutions using converged infrastructure (CI) and hyper-converged infrastructure (HCI) hardware. Sounded great, but the reality was tough. Underneath the “markitecture” layer of slides and colourful diagrams, these solutions were not really integrated at all.

We had to:

- scrape web servers,

- wrap SSH calls to switches,

- deal with BMCs that would randomly crash instead of performing an operation, and

- make all this look like a cohesive automated cloud solution.
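Much of that glue code boils down to defensive wrappers. As a rough sketch of the pattern (not the code from that role), a retry-with-backoff helper around a flaky BMC call might look like the following. `with_retries` and `flaky_power_on` are hypothetical names; the latter simply simulates a BMC that crashes twice before succeeding.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(op: Callable[[], T], attempts: int = 3, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call op(), retrying on any exception with exponential backoff.

    Re-raises the last error once all attempts are used, so failures stay visible.
    """
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return op()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    raise AssertionError("unreachable")


# Hypothetical BMC operation that fails twice, then succeeds.
calls = {"n": 0}


def flaky_power_on() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("BMC stopped responding")
    return "powered on"


print(with_retries(flaky_power_on, sleep=lambda s: None))  # powered on
```

A wrapper like this keeps a pipeline moving, but it only papers over the fault in the BMC; the underlying unreliability is still there.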

And this was all because CI and HCI essentially re-purposed hardware that was designed for something else.

Walking the golden path of automating traditional converged infrastructure is painful. One foot wrong, and you are forced to manually intervene or wipe the entire solution and start again. Converged infrastructure integration is only ever skin-deep.

What we really wanted was a single universal API that would enable infrastructure administrators to consume hardware as fluidly, simply and consistently as developers consume XaaS offerings, bringing cloud paradigms to both the staff using the solution and the staff supporting it.

The impact of ‘cloud native’ hardware on business value

Cloud native hardware addresses the CI/HCI challenges faced by your private cloud engineers. But beyond that, cloud native hardware offers a range of benefits that significantly contribute to the overall value of your solution:

- Allows straightforward utility-style consumption

- Faster time to market

- Fewer barriers to consume the service yourself

- Replaces manual processes with automated jobs and pipelines

- Reduces human error

- Repeatable and consistent customer experience

- Enables staff to manage larger quantities of resources

- Lower operational cost per unit of service

- Improved uptime

- Reduces risk of outages by intelligently healing without human intervention

- Fewer support tickets

- Improved customer experience

- Natively supports scale-out as part of the DNA with minimal human effort

- Meets the challenges of large-scale applications

- Lower operational costs

Traditional vendors are trapped by their pre-cloud solutions

If it were possible to address all these infrastructure challenges via a single API, then something amazing would be possible. Unfortunately, because traditional server vendors prefer to avoid touching core BMC, switching and storage functionality, they are forced to layer new APIs on top of old APIs.

Layering modern APIs on top of old APIs (which themselves often run CLI commands) builds a tenuous house of cards. One wrong move, and the entire solution falls down.
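To illustrate why this layering is fragile, here is a hypothetical sketch of a "modern" API handler that, underneath, runs a CLI command and scrapes its text output. The command, its output format and the function names are all invented for illustration; no real vendor tool is implied.

```python
import re
from typing import Callable


def parse_port_status(cli_output: str) -> dict[str, str]:
    """Scrape 'show ports'-style text into {port: status}.

    Any change to the CLI's wording or layout silently breaks this parser.
    """
    ports = {}
    for line in cli_output.splitlines():
        m = re.match(r"^(eth\d+)\s+(up|down)\b", line.strip())
        if m:
            ports[m.group(1)] = m.group(2)
    return ports


def get_ports_api(run_cli: Callable[[str], str]) -> dict:
    """The shiny 'REST' layer: JSON-ready data built from scraped CLI text."""
    return {"ports": parse_port_status(run_cli("show ports"))}


# Two hypothetical firmware versions of the same CLI.
def old_firmware(cmd: str) -> str:
    return "eth0   up\neth1   down\n"


def new_firmware(cmd: str) -> str:
    return "Port  State\neth0  UP\neth1  DOWN\n"  # update capitalises the state


print(get_ports_api(old_firmware))  # {'ports': {'eth0': 'up', 'eth1': 'down'}}
print(get_ports_api(new_firmware))  # {'ports': {}}  (no error, just wrong data)
```

Nothing raises an exception when the firmware changes; the API above simply starts returning empty data, which is exactly the kind of silent failure that brings the house of cards down.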

Because of inertia and market forces, vendors like HP, Dell, Cisco and IBM are forced to incrementally update existing server platforms without fixing the core problems. What was deemed good in 2003 is essentially still what is being sold in 2023.

Speeds and capacities increase, but the platform design remains untouched. BMCs must retain support for ancient protocols like WS-MAN, and along with those old codebases come inherited bugs and security problems.

Storage arrays are the nexus of that particular evil. Designed to be black magic that sustained a thriving industry of professional support services, Fibre Channel storage arrays require manual intervention for many processes. Manual really means manual, here: some of these systems include an entire Windows desktop system in the back of the machine, and an operator must physically walk up to it and use a locally attached application to enter commands.

The designers of these systems felt that opening up access through an API would compromise the system. Luckily, those systems were built around aggregating large numbers of spinning disks, so the NAND era has left them behind: a brand new 64 Gb/s FC connection is no faster than a single modern NVMe drive (PCIe Gen5 x4), and it lags far behind the capabilities of Ethernet. Such systems are now seen less often in private cloud, and their vendors prefer instead to target cash-rich customers such as mainframe users.
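A back-of-the-envelope comparison makes the bandwidth point concrete. The sketch below uses headline figures only: it takes the 64 Gb/s FC rate at face value (real throughput is somewhat lower), applies PCIe's 128b/130b encoding to the lane rate, and uses nominal 400GbE for Ethernet. Real-world throughput is lower for every technology listed, so treat the output as an order-of-magnitude comparison.

```python
def pcie_gbytes(gt_per_s: float, lanes: int) -> float:
    """Nominal one-direction PCIe bandwidth in GB/s.

    PCIe Gen3 and later use 128b/130b encoding, so usable bits per lane are
    GT/s * 128/130; divide by 8 to convert bits to bytes.
    """
    return gt_per_s * lanes * 128 / 130 / 8


# Nominal one-direction bandwidth in GB/s (decimal units).
links = {
    "64GFC Fibre Channel": 64 / 8,         # headline rate taken at face value
    "NVMe, PCIe Gen4 x4": pcie_gbytes(16, 4),
    "NVMe, PCIe Gen5 x4": pcie_gbytes(32, 4),
    "400GbE Ethernet": 400 / 8,
}

for name, gbytes in links.items():
    print(f"{name:22s} {gbytes:6.2f} GB/s")
```

On these headline numbers a Gen4 x4 drive roughly matches a 64 Gb/s FC link, a Gen5 x4 drive has about twice its bandwidth, and a single 400GbE port has several times more again.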

Manual processes and ‘automation’ that isn’t really all that automated

One of the reasons for the decline of Fibre Channel storage arrays (apart from their failure to keep up with growing NAND speeds) is their fixation on manual installation and configuration processes, which puts these systems at odds with any sort of modern automated deployment.

Laughably, in the realm of storage systems such as EMC’s Fibre Channel offerings, the term ‘automation’ can sometimes mean automatically generating a ticket for a human to connect to the system, or even to drive to the customer’s site!

Trapped in the halfway house

Awareness of the need to automate has led vendors like HP to seek automation solutions, and followers like Dell are attempting to copy the approach.

Reluctant to truly update the hardware, these vendors paper over the old APIs with a new API to create a “cloud-like” deployment process. These processes mingle manual and automated steps with limited observability and feedback, meaning that deployment steps can silently fail and require human intervention.
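One practical difference between papered-over and genuinely automated deployment is whether each step's outcome is independently observed. Below is a minimal sketch, assuming hypothetical steps that each pair an action with a post-condition check; the `Step`, `StepFailed` and `run_pipeline` names, and the in-memory `state` standing in for real hardware, are all illustrative.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    act: Callable[[], None]      # performs the change
    verify: Callable[[], bool]   # observes whether the change actually took effect


class StepFailed(RuntimeError):
    pass


def run_pipeline(steps: list[Step]) -> list[str]:
    """Run steps in order, stopping loudly at the first unverified step."""
    completed = []
    for step in steps:
        step.act()
        if not step.verify():
            # Fail with context instead of carrying on with a half-deployed system.
            raise StepFailed(f"step {step.name!r} did not take effect; completed: {completed}")
        completed.append(step.name)
    return completed


# Hypothetical in-memory state standing in for real hardware.
state = {"vlan": None, "firmware": "1.0"}

steps = [
    Step("configure VLAN", lambda: state.update(vlan=100), lambda: state["vlan"] == 100),
    # This "update" silently does nothing, as a half-automated step might.
    Step("update firmware", lambda: None, lambda: state["firmware"] == "2.0"),
]

try:
    run_pipeline(steps)
except StepFailed as err:
    print(err)  # step 'update firmware' did not take effect; completed: ['configure VLAN']
```

The verification functions are the important part: without them, the firmware step above would report success and the failure would surface much later, if at all.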

The temptation to “get it done” amongst traditional vendors leads to a reliance on hacks, such as using the BMC as a reverse proxy for host management. Combine this with a “Not Invented Here” attitude to software and you have a looming security risk!

Traditional solutions with another API slapped on top create inefficient architectures with single points of failure.

A new generation of cloud native hardware is emerging

Hypocritical dualism of hardware and software - where the beliefs that you hold as essential for one are utterly ignored for the other - cannot provide an optimal solution. Cloud consumption of software does not efficiently pair with manual deployment of hardware.

True scaling and resilience require cloud native hardware: hardware that natively supports management via simple and efficient APIs. Not just an old CLI tool papered over with a new API, but a clean, purpose-designed solution.

To “do more with less” in the age of the cloud, IT departments must adopt intelligent hardware that is designed for purpose - not re-purposed legacy designs.

Back to business (value)

It’s easy to dismiss ‘cloud native’ as a buzzword. But the truth is, it represents a transformational paradigm shift for business. We are moving from the old world where vision and scale were limited to human hands typing, to one where intelligent infrastructure grows itself on command and heals automatically.

Cloud native hardware enables teams to do more. Manage more compute, run more applications, provide better customer experiences.